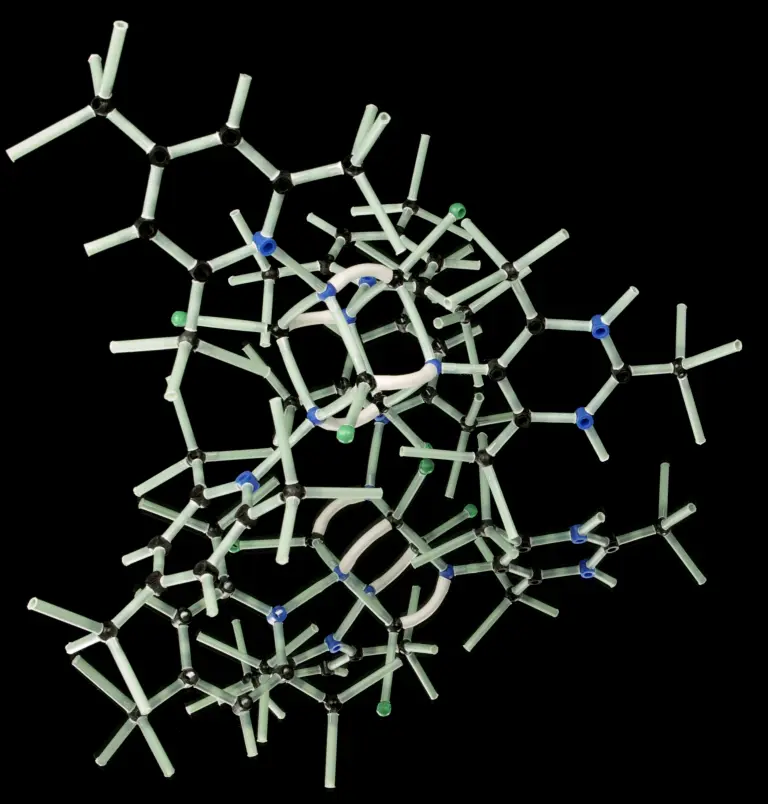

The accuracy of predictions was a central issue in the emergence of computational quantum chemistry. Predicting the properties of particular chemical systems, real or hypothetical, requires computational methods that rely on approximate mathematical techniques and models. The applicability, predictive power, and reliability of these methods were controversial for many years, because none of them could achieve accuracy comparable to that of traditional chemical methods. Thus, even though computational methods had been evolving since the early 1960s, they were not universally accepted as legitimate tools for producing chemical knowledge until the 1980s.

Practitioners in computational quantum chemistry were divided between two approaches to constructing computational methods. Some held that these methods should be based strictly on theory, while others believed that their main challenge was not theoretical grounding but direct and wide applicability to real chemical problems. The work of John Anthony Pople (1925–2004), who received the Nobel Prize in Chemistry in 1998, was of central importance in this respect. In the 1970s Pople’s ideas were incorporated into the quantum chemistry program Gaussian™, which is still widely used today.

The study of the emergence of computational quantum chemistry raises important epistemological questions. Do computational methods constitute a new way of producing knowledge? How can the limits of a method’s reliability be defined? Is prediction based on theory, or on extensive testing of the reliability of a complex method? The fact that quantum chemists have been leading users of electronic computers since the late 1950s illustrates the dynamic interaction among computer technology, the construction of scientific models, and their predictive power.